Unveiling Quadrigram

Posted: June 28th, 2012

For the last 8 months I have been working almost exclusively with my friends at the information visualization consulting company Bestiario on new tools to visualize information. Last year, based on our joint experience, we detected two increasing demands within innovative institutions. First, the wish to think freely with data, outside of coding, scripting, wizard-based or black-box solutions. Second, the need to spread the power of information visualization within organizations so that it reaches the hands of people with knowledge and ideas of what data mean.

Our efforts have now culminated in Quadrigram, a Visual Programming Environment to gather, shape and share living data. By living data we mean data that are constantly changing and accumulating. They can come from social networks, sensor feeds, human activity, surveys, or any kind of operation that produces digital information.

For Bestiario, with its long track record in ‘haute couture’ interactive visualizations, Quadrigram offers ‘prêt-à-porter’ solutions for organizations, consultants, analysts, designers and programmers working routinely with these types of data. As with other services, data visualization plays a central role in making sense of and sharing complex data.

I got the chance to work on multiple conceptual, engineering and strategic aspects of Quadrigram. In this post I summarize the four main areas I had the pleasure to shape in collaboration with Bestiario:

1) Redefining work with data

For us at Near Future Laboratory it made sense to help Bestiario with our experience in prototyping solutions that become feedback loops where our clients can actually figure something out. Indeed, more and more results of our investigations have become interfaces or objects with a means of input and control rather than only static reports. The design of Quadrigram rests on this very idea of a ‘feedback loop’ and provides a WYSIWYG (What You See Is What You Get) interface. It is designed for iterative exploration and explanation. Each iteration, or “sketch”, is an opportunity to find new questions and provide answers with data. Data mutate and take different structures in order to unveil their multiple perspectives. We like to think that Quadrigram offers this unique ability to manipulate data as a living material that can be shaped in real time or, as Mike Kuniavsky nicely describes in Smart Things: Ubiquitous Computing User Experience Design: “Information is an agile material that needs a medium”. And this concerns not only ‘data scientists’ but everybody with knowledge and ideas whose work involves data.

With the diffusion of access to data (e.g. the open data movement), our investigations with data have become utterly multi-disciplinary. Nowadays, our projects bring different stakeholders on board with rapidly prototyped tools that promote the processing, recompilation, interpretation, and reinterpretation of insights. For instance, our experience shows that the multiple perspectives extracted from the use of exploratory data visualizations are crucial to quickly answer some basic questions and provoke many better ones. Moreover, the ability to quickly sketch an interactive system or dashboard is a way to develop a common language amongst varied stakeholders. It allows them to focus on tangible opportunities for products or services that are hidden within their data. I like to call this practice ‘Sketching with Data’; others, such as Matt Biddulph, talk about “Prototyping with data” (see also Prototyping location apps with real data). Regardless of the verb used, we suggest a novel approach to working with data in which analyses and visualizations are not the only results, but rather the supporting elements of a co-creation process to extract value from data. In Quadrigram, the tools to sketch and prototype took the form of a Visual Programming Environment.

The teaser video summarizes the vision behind Quadrigram.

2) Reducing the barriers of data manipulation

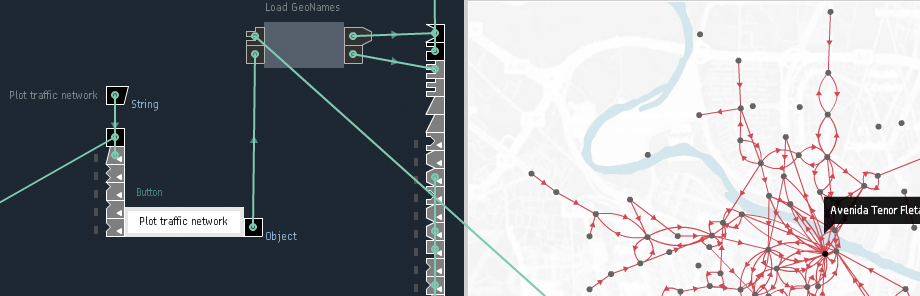

Visual Programming Environments have flourished in the domain of information technologies, starting with LabVIEW in the 80s and then spreading to the emerging fields mixing data with creativity such as architecture, motion graphics and music. In these domains, they have demonstrated virtues in reducing the barrier of entry for non-experts (check the VL/HCC community for more on the topic). In the Visual Programming Environment we developed, users interactively manipulate pre-programmed modules represented as graphical elements. When connected, these modules form a ‘data flow’ (an approach also called dataflow programming) that provides constant visual awareness of the result of the program (“What You See Is What You Get”), ideal for quick “trial and error” explorations. This way the tool allows for the evaluation of multiple pathways towards the correct solution or desired result. It inspires solution-finding for non-technical professionals by exposing the full flow of data.
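For readers who think better in code than in boxes and wires, here is a minimal Python sketch of the dataflow idea described above. It is not Quadrigram's implementation or API: the `Module` class, the `run_flow` function and the sample numbers are all hypothetical, and the sketch assumes the modules are listed in dependency order. The point it tries to show is that every intermediate result stays visible, which is what makes “trial and error” cheap.

```python
class Module:
    """A pre-programmed step with named upstream inputs (hypothetical, for illustration)."""

    def __init__(self, name, func, inputs=()):
        self.name = name
        self.func = func
        self.inputs = list(inputs)


def run_flow(modules):
    """Evaluate modules in the order given and keep every intermediate result.

    Assumption: each module appears after the modules it depends on.
    """
    results = {}
    for module in modules:
        args = [results[upstream] for upstream in module.inputs]
        results[module.name] = module.func(*args)
    return results


# A tiny flow: load -> filter -> sort -> count (made-up sample values).
flow = [
    Module("load", lambda: [12, 7, 31, 7, 19]),
    Module("filter", lambda xs: [x for x in xs if x > 10], ["load"]),
    Module("sort", lambda xs: sorted(xs), ["filter"]),
    Module("count", lambda xs: len(xs), ["sort"]),
]

results = run_flow(flow)

# Every stage of the flow can be inspected, not only the final output.
for name, value in results.items():
    print(name, "->", value)
```

Swapping the `filter` lambda for another one and re-running the flow is the textual equivalent of rewiring a module in the visual environment.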

The take a tour video presents the Visual Programming Environment, which offers a transparent way of setting up a solution, in contrast with wizard-based environments and their “black boxes”.

3) Creating a coherent language

A major challenge when grouping tools to work with data within a common Visual Programming Environment has been to define the basic building blocks of a language. Starting from scratch, we used an exploratory phase that led to the release of an experimental environment called Impure and its large set (500) of diverse modules. This free solution generated a decent community of 5,000 valorous users. We used Impure as a testbed for our ideas and performed the necessary user studies to come up with a coherent basic language. We particularly focused on specific action verbs (what people can do; see Verbs and design and verbs) that enclose the most common operations on data: sort, search, insert, merge, count, compare, replace, remove, filter, create, get, cluster, encode, decode, convert, accumulate, split, resize, set, execute, load, save. These actions are performed on Data Structures (e.g. create List, sort Table, replace String, cluster Network, compare Date, resize Rectangle, load Image, …) within specific domains (e.g. Math, Geography, Statistics, …). The language is complemented with a growing list of Visualizers categorized according to their objectives to reveal aspects about the data (e.g. compare, contextualize, relate, …). Through this structure (actions – structure – domain) users can find the appropriate module within a very dense and diverse toolset.
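To make the (actions – structure – domain) idea a bit more concrete, here is a small Python sketch of how such a catalog could be searched. It is only an illustration: `ModuleEntry`, `CATALOG` and `find_modules` are hypothetical names, and the domain I attached to each module is an assumption made for the example, not the actual Quadrigram classification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModuleEntry:
    """One module described by its action verb, data structure and domain."""
    action: str
    structure: str
    domain: str


# Hypothetical catalog; the action/structure pairs come from the post,
# the domains are assumed for the sake of the example.
CATALOG = [
    ModuleEntry("sort", "Table", "Statistics"),
    ModuleEntry("sort", "List", "Math"),
    ModuleEntry("cluster", "Network", "Statistics"),
    ModuleEntry("compare", "Date", "Math"),
    ModuleEntry("resize", "Rectangle", "Geography"),
]


def find_modules(action=None, structure=None, domain=None):
    """Return the entries matching every criterion that was provided."""
    def matches(entry):
        return (
            (action is None or entry.action == action)
            and (structure is None or entry.structure == structure)
            and (domain is None or entry.domain == domain)
        )
    return [entry for entry in CATALOG if matches(entry)]


sort_modules = find_modules(action="sort")
network_modules = find_modules(structure="Network")
print(sort_modules)
print(network_modules)
```

With three short vocabularies to combine, a user can narrow hundreds of modules down to a handful without knowing any module name in advance.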

This exploratory analysis video shows how a single language provides similar perspectives on the same dataset.

4) Steering the development of an environment that takes advantage of an ecosystem of great tools

Bestiario’s CEO José Aguirre always likes to present Quadrigram as a sponge capable of absorbing information from many diverse sources: social networks, databases, Internet of Things, social media tools, business analytics tools, etc., stressing that “In the wild we know that it is not the strongest who survive but rather those who best cooperate”. We brought that vision to reality with an environment based on servers ‘in the cloud’ that integrates with other sophisticated tools. Like many other platforms, Quadrigram connects to various types of data sources (databases, APIs, files, …) to load data within a workspace. But we also wanted users with detailed needs to take advantage of R scripting to perform advanced statistical methods, or of Gephi to lay out large networks. The main challenge was to find and implement a protocol to communicate Quadrigram data structures back and forth with these great tools. In other words, we wanted users to perform analysis in R as part of their data flow. Similar to the architecture of distributed systems and the use of JSON nowadays, the solution was to pass around serialized Quadrigram objects. That offers a pretty unique mechanism to store and share the results of data manipulations, which we call “memories”. For instance, the content of a Table stored on a Quadrigram server is publicly available to other tools via a URL (e.g. http://nfl.quadrigram.com/account/m/ext/memo/public/fabien/cosm/cpu stores an analysis of my CPU activity).
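As a rough illustration of what passing around serialized objects can look like, here is a Python sketch that turns a small table into a JSON string and back. I am assuming JSON and a made-up payload layout here; the actual Quadrigram serialization format is not described in this post, and `table_to_memory`, `memory_to_table` and the sample values are hypothetical.

```python
import json


def table_to_memory(name, columns, rows):
    """Serialize a table into a JSON string (assumed 'memory' layout, for illustration)."""
    payload = {"type": "Table", "name": name, "columns": columns, "rows": rows}
    return json.dumps(payload)


def memory_to_table(text):
    """Rebuild the columns and rows from a serialized table."""
    payload = json.loads(text)
    if payload.get("type") != "Table":
        raise ValueError("not a Table memory")
    return payload["columns"], payload["rows"]


# Made-up CPU readings standing in for the result of a data flow.
memory = table_to_memory("cpu", ["minute", "load"], [[0, 0.42], [1, 0.57]])

# Any tool able to read the string (R, Gephi, a script) can rebuild the table.
columns, rows = memory_to_table(memory)
print(columns)
print(rows)
```

Because the serialized form is just text, it can sit behind a URL and be fetched by another tool, which is the spirit of the “memories” described above.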

Why do I blog this: It has been a unique opportunity to help shape a software product and bring it to market. When we created Lift Lab and now Near Future Laboratory, we knew it was the kind of experience we wanted to live. This post is an attempt to keep track of the work performed to make Quadrigram a tool that we hope will open new practices around the manipulation and visualization of data. Thanks to the team at Bestiario for their talent and stimulating discussions. I will continue contributing to the project with ongoing technical, strategic and conceptual guidance. I have also joined the advisory board in the company of Bernando Hernandez and Jaume Oliu.